How the Coronavirus Pandemic Has Strained Quality Standards for Data Visualisation

In these times of Corona, data visualisation is probably shaping opinion and public sentiment more than ever before. At the same time, the constantly shifting data and sources lead those of us who visualise data to turn a blind eye here and there when it comes to meeting common quality standards. So how do we strike a balance between accuracy and comprehensibility? A look back at a year of pandemic reporting.

The year 2020 was a special one for data visualisation. The coronavirus pandemic made it arguably the most numbers-heavy year in recent memory. Data journalists, statisticians, politicians and ordinary citizens alike: the whole of society stared at numbers, visualisations and forecasts throughout the year - and still does. Every newly reported infection, every discrepancy between reports from different sources, every comparison with other diseases and every correlation with the number of tests is closely observed, interpreted and discussed. With little time to prepare, authorities and journalists alike responded to the need for information, often seemingly uncoordinated and without knowing what the next week would bring.

The demand for information remains enormous, as does the interest in the latest figures and data visualisations. Corona dashboards and maps have pushed website traffic to record highs that are unlikely to be beaten any time soon. Users often check data-driven stories several times a day to get a picture of the current situation and to form an opinion on whether the measures taken by policy-makers are working. But with such success comes great responsibility. Probably more than ever before, data visualisations influence opinion formation and public sentiment. At the same time, the constantly shifting data and sources lead those of us who visualise data to turn a blind eye here and there when it comes to meeting common quality standards. How have our choices changed, and how do we keep managing the balancing act between accuracy and comprehensibility?

Quality etiquette in data journalism

Data journalism is first and foremost journalism. So all the quality standards of journalism, such as relevance, accessibility, accuracy and timeliness, also apply to data journalism. In addition, data journalism often works at the intersection of journalism and science. Data-driven stories not only report on science but are often small research projects in their own right, which can give the audience the impression of scientific accuracy. Data journalists bear a special responsibility precisely because their simplified presentations usually reach many more people than a scientific publication does. Data journalists must therefore also be measured against scientific quality standards. They are engaged in a constant balancing act between the scientific demand for accuracy and the journalistic demand for accessibility.

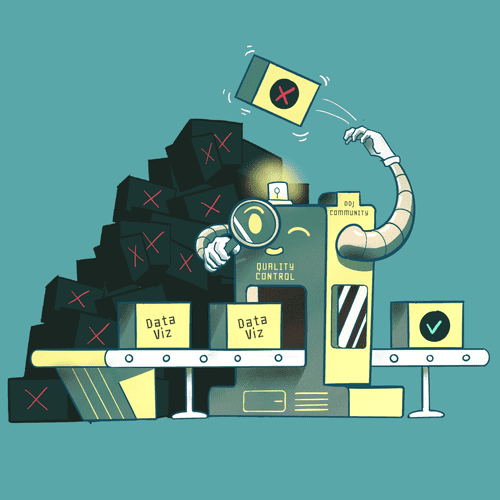

To begin with, we data journalists hold ourselves to fairly high standards of scientific accuracy. Often enough, we reject a form of presentation or an analysis when the gain in comprehensibility and speed cannot justify the loss of accuracy. We data journalists also critique each other's forms of presentation and choices of method. So there is a certain degree of internal quality control in data journalism.

The pandemic as an extreme balancing act

The pandemic has made this internal quality assessment extremely difficult. Some scientists probably feel the same way. Since spring 2020, the pressure to be relevant and current has been so immense that the scales, normally carefully balanced, are tipping sharply: simplification and speed are in demand, while we desperately try to keep scientific accuracy as high as possible.

For example, from the very beginning of the pandemic, data journalists showed the daily number of newly reported infections in Germany in their dashboards and graphs, knowing full well that the case definition, reporting methodology, testing capacity and publication times would keep changing. This made day-to-day comparisons of the numbers difficult, especially for lay people. Without the immense pressure to be relevant, many of us would probably have decided against using this data.

Under other circumstances, we would probably never have presented and compared data from so many different sources, from countries with different reporting requirements, estimation methods and definitions, all together. Yet the global figures from Johns Hopkins University, on which most data-driven stories draw to describe the global situation, consist of exactly this kind of data. With any other topic, we would have said: we can't use these data sets; they are not comparable and the sources are not homogeneous. But in the current situation, they are simply the best approximation of reality we have, despite missing recovery reports from the Netherlands, sudden corrections to the numbers from countries like Turkey or Spain, and changing testing criteria in Italy (where at times only hospitalised people were tested).

When it comes to accessibility, data journalists have also strained their standards: a graph showing the development of the reproduction number over time, complete with uncertainty intervals, would probably never have been produced for an ordinary flu season. Hardly anyone would have understood it. That is because data journalists usually follow a simple principle: if readers first have to read a lengthy explanation to understand a graph, the graph fails at its actual purpose, which is to make a complex topic understandable at a glance. And before the pandemic, no one would have understood what a reproduction number is supposed to be without such an explanation. At least the coronavirus has brought about one thing: readers are more willing than usual to engage with more complex metrics and visualisations.

All in all, the explanatory texts accompanying data-driven coronavirus analyses read like long disclaimers: at every turn there are little asterisked sentences pointing to reporting delays and other caveats. If all our projects needed this many disclaimers, I don't think we would even start them, or we would at least aim them only at a scientifically interested audience.

Strained, not broken

Nevertheless, data journalists did not simply throw their quality standards overboard during the Corona pandemic. Instead, there were many internal and even industry-wide discussions. We continue to strive to inform as well as we can without oversimplifying or misrepresenting things. Every small change in presentation or source selection is discussed intensively, the pros and cons are weighed, and only what we consider justifiable is implemented, despite the pressure to be up to date. We work with what we have, make the best of it, try to help readers find their bearings in this pandemic, and keep reassessing how to stay balanced on this tightrope, with timeliness and comprehensibility on one side and scientific accuracy on the other. It remains important that we never stop questioning, critiquing and readjusting ourselves. Only in this way can we keep our balance and avoid falling off the tightrope.